In general, multiple MOS devices are made on a common substrate. As a result, the substrate voltage of all devices is normally equal. However, when devices are connected in series, the source-to-substrate voltage increases as we proceed vertically along the series chain (Vsb1 = 0, Vsb2 > 0), which, through the body effect, results in Vth2 > Vth1.
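The body effect can be made concrete with the standard expression Vth = Vth0 + γ(√(2φF + Vsb) − √(2φF)). The sketch below is illustrative only: the values Vth0 = 0.43 V, γ = 0.4 V^0.5, 2φF = 0.6 V, and the 0.3 V intermediate-node voltage are assumptions, not data from this post.

```python
import math

def threshold_voltage(v_sb, vt0=0.43, gamma=0.4, two_phi_f=0.6):
    """Body-effect threshold voltage of an NMOS device, in volts.

    vt0       -- zero-bias threshold voltage (Vsb = 0), assumed value
    gamma     -- body-effect coefficient in V^0.5, assumed value
    two_phi_f -- surface potential term 2*phi_F in volts, assumed value
    """
    return vt0 + gamma * (math.sqrt(two_phi_f + v_sb) - math.sqrt(two_phi_f))

# Two NMOS transistors in series (for example a 2-input NAND pull-down).
# The bottom device has its source on ground, so Vsb1 = 0.
# The top device's source sits on the intermediate node; assume 0.3 V here.
vth1 = threshold_voltage(0.0)
vth2 = threshold_voltage(0.3)
print(f"Vth1 = {vth1:.3f} V, Vth2 = {vth2:.3f} V")
```

With these assumed parameters the upper transistor's threshold rises by roughly 70 mV, which is why the device nearest the output in a series stack is the weakest one.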

The motive of this group is to create awareness among students of the VLSI/semiconductor industry. If possible, I would like to create a link between experts and students. If every employee in the semiconductor industry takes responsibility for one candidate (a fresher or someone who has just entered the industry) and spends a couple of hours a week, then we can change the whole world within a few months. -Fresher

Friday, 27 February 2015

SKIN EFFECT

SKIN EFFECT IN SEMICONDUCTOR WIRES:

So far, we have considered the resistance of a semiconductor wire to be linear and constant. This is a valid assumption for most semiconductor circuits. At very high frequencies, however, an additional phenomenon called the skin effect comes into play, and the resistance becomes frequency-dependent.

High-frequency currents tend to flow primarily on the surface of a conductor, with the current density falling off exponentially with depth into the conductor. The skin depth δ is defined as the depth at which the current falls off to $e^{-1}$ of its nominal value, and is given by

$$\delta = \sqrt{\frac{\rho}{\pi f \mu}}$$

with ρ the resistivity of the conductor, f the frequency of the signal, and μ the permeability of the surrounding dielectric (typically equal to the permeability of free space, $\mu = 4\pi \times 10^{-7}$ H/m). For aluminum at 1 GHz, the skin depth is equal to 2.6 μm.

The obvious question now is whether this is something we should be concerned about when designing state-of-the-art digital circuits.

The effect can be approximated by assuming that the current flows uniformly in an outer shell of the conductor with thickness δ, as illustrated in the figure for a rectangular wire. Assuming that the overall cross-section of the wire is now limited to approximately $2(W+H)\delta$, we obtain the following expression for the resistance (per unit length) at high frequencies ($f > f_s$):

$$r(f) = \frac{\rho}{2(W+H)\delta}$$

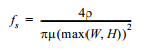

The increased resistance at higher frequencies may cause extra attenuation, and hence distortion, of the signal transmitted over the wire. To determine the onset of the skin effect, we can find the frequency $f_s$ at which the skin depth equals half the largest dimension (W or H) of the conductor. Below $f_s$ the whole wire conducts current, and the resistance equals the (constant) low-frequency resistance of the wire. Setting δ = max(W, H)/2 in the skin-depth expression, we find the value of $f_s$:

$$f_s = \frac{4\rho}{\pi \mu \, (\max(W,H))^2}$$
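The three skin-effect formulas, δ = √(ρ/(πfμ)), f_s = 4ρ/(πμ·max(W,H)²) and r = ρ/(2(W+H)δ) above f_s, can be checked numerically. This is a sketch under stated assumptions: ρ(Al) ≈ 2.7×10⁻⁸ Ω·m and a 1 μm × 1 μm wire are illustrative values, and clamping the result so it never drops below the DC resistance is our own choice, because the outer-shell approximation overestimates the conducting area just above f_s.

```python
import math

MU0 = 4 * math.pi * 1e-7  # permeability of free space, H/m

def skin_depth(rho, f, mu=MU0):
    """Skin depth in metres: delta = sqrt(rho / (pi * f * mu))."""
    return math.sqrt(rho / (math.pi * f * mu))

def onset_frequency(rho, w, h, mu=MU0):
    """Frequency f_s at which the skin depth equals max(W, H) / 2."""
    return 4 * rho / (math.pi * mu * max(w, h) ** 2)

def resistance_per_length(rho, w, h, f, mu=MU0):
    """Resistance per metre (ohm/m) of a rectangular W x H wire at frequency f."""
    r_dc = rho / (w * h)
    if f <= onset_frequency(rho, w, h, mu):
        return r_dc  # whole cross-section conducts
    r_skin = rho / (2 * (w + h) * skin_depth(rho, f, mu))  # outer shell of thickness delta
    # Our choice: never report less than the DC value (shell model is crude near f_s).
    return max(r_dc, r_skin)

RHO_AL = 2.7e-8  # resistivity of aluminum, ohm*m (approximate)
w = h = 1e-6     # assumed 1 um x 1 um wire
fs = onset_frequency(RHO_AL, w, h)
print(f"Al skin depth at 1 GHz: {skin_depth(RHO_AL, 1e9) * 1e6:.2f} um")
print(f"f_s for a 1 um x 1 um Al wire: {fs / 1e9:.1f} GHz")
print(f"r(1 THz) / r_dc = {resistance_per_length(RHO_AL, w, h, 1e12) / (RHO_AL / (w * h)):.2f}")
```

For this assumed geometry f_s lands in the tens of GHz, which illustrates why the skin effect matters mainly for wide wires or very fast signals.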

A tie-high, tie-low circuit

Tie-high and tie-low cells are used to avoid connecting gates directly to the power or ground network. In your design, some cell inputs may require a logic 0 or logic 1 value. Spare cell inputs are also connected to ground or power nets, because you cannot leave them unconnected. Instead of connecting these inputs to the VDD/VSS rails or rings, you connect them to special cells in your library called TIE cells (note: not all libraries have them).

A tie-high, tie-low circuit having a tie-high output and a tie-low output comprises a regenerative device coupled to both the tie-high and the tie-low outputs, and at least one PMOS device and one NMOS device coupled to a high voltage and a low voltage, respectively.

An integrated circuit (IC) application does not always require all of its inputs to be used. The inputs that are not used should advantageously be locked in a single, stable logic state, and should not be left floating, because inputs having unpredictable or intermediate logic states may have unpredictable and unrepeatable influences on logic outcomes. This is a major issue that IC designers strive to eliminate.

For stability, therefore, small circuits are inserted into ICs. The small circuits have at least two outputs: one that is always high and another that is always low. These circuits are then used to tie IC inputs to either a high state or a low state. By implementing these circuits, inputs that are not used are locked in a single, stable logic state.

However, various issues exist in the conventional designs of these circuits. For example, many of these circuits comprise at least four transistors, which take up valuable real estate in ICs and may require additional, costly production steps. As another example, some of the designs of these circuits comprise three transistors, but such designs typically exhibit limited tolerance to electrostatic discharge (ESD).

Therefore, what is desired in integrated circuit design are smaller tie-high/tie-low circuits with increased ESD tolerance that can be used to tie an unused IC input high or low.

Why do you use them?

Gate oxide is thin and sensitive to voltage surges. Some processes do not let you connect gates directly to the power rails, since any voltage surge, such as an ESD event, can damage the gate oxide. Hence tie cells, which are diode-connected n-type or p-type devices, are used instead, so that the gates are not connected directly to either power or ground. That is the argument foundries make for ESD protection against surges.

Now, go check the schematic of the TIE cells in your standard cell library. It is possible that a tie cell is just an inverter with its input tied to either VDD (tie-low) or VSS (tie-high). In that case, the gate inside the tie cell is still connected to a power rail. However, there are other uses for these cells: leakage current is reduced in this configuration, and rewiring them during an ECO is easier, especially if you just want to swap a 1'b1 for a 1'b0.

DOUBLE PATTERNING

What is Double patterning?

Double patterning is a technique used in the lithographic process that defines the features of integrated circuits at advanced process nodes. It enables designers to develop chips for manufacture at 20nm and below using current optical lithography systems.

The downsides of using double patterning include increased mask (reticle) and lithography costs, and the imposition of further restrictions on the ways in which circuits can be laid out on chip. This affects the complexity of the design process and the performance, variability and density of the resultant devices.

What does double patterning do and why do we need it?

Double patterning counters the effects of diffraction in optical lithography, which occur because the minimum dimensions of advanced process nodes are a fraction of the 193nm wavelength of the illuminating light source. These diffraction effects make it difficult to produce accurately defined deep sub-micron patterns using existing light sources and conventional masks: sharp corners and edges become blurred, and some small features on the mask won't appear on the wafer at all.

In double patterning, the original mask is split into two different masks, say mask A and mask B, so that features too close together to print reliably in a single exposure end up on different masks. This avoids diffraction effects such as shorts and opens. As a result, mask (lithography) costs increase when we move to double patterning, and extra DRC rules are introduced in the design.
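Splitting a layer into masks A and B is commonly modeled as two-coloring a conflict graph: each feature is a node, and any two features closer than the minimum same-mask spacing are joined by an edge. The layer can be decomposed only if that graph is bipartite; an odd cycle is a coloring conflict that has to be fixed in the layout. A minimal sketch, assuming rectangular features given as (x1, y1, x2, y2) in nm and an illustrative same-mask spacing (real rules are foundry-specific):

```python
import math
from collections import deque

def rect_spacing(a, b):
    """Edge-to-edge distance between two axis-aligned rectangles (x1, y1, x2, y2)."""
    dx = max(0.0, b[0] - a[2], a[0] - b[2])
    dy = max(0.0, b[1] - a[3], a[1] - b[3])
    return math.hypot(dx, dy)

def decompose(rects, min_same_mask_space):
    """Assign each feature to mask 'A' or 'B', or return None on an odd-cycle conflict."""
    n = len(rects)
    adj = [[] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rect_spacing(rects[i], rects[j]) < min_same_mask_space:
                adj[i].append(j)   # too close to share a mask
                adj[j].append(i)
    color = [None] * n
    for start in range(n):
        if color[start] is not None:
            continue
        color[start] = 0
        queue = deque([start])
        while queue:               # breadth-first two-coloring
            u = queue.popleft()
            for v in adj[u]:
                if color[v] is None:
                    color[v] = 1 - color[u]
                    queue.append(v)
                elif color[v] == color[u]:
                    return None    # odd cycle: not decomposable
    return ["A" if c == 0 else "B" for c in color]

lines = [(0, 0, 20, 1000), (60, 0, 80, 1000), (120, 0, 140, 1000)]  # 40 nm gaps
print(decompose(lines, 60))        # alternating masks
triangle = [(0, 0, 20, 20), (40, 0, 60, 20), (20, 40, 40, 60)]      # pairwise 20 nm apart
print(decompose(triangle, 30))     # odd cycle, needs a layout fix
```

The triangle case is exactly the kind of pattern that the extra double-patterning DRC rules are meant to prevent.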

A number of reticle enhancement techniques have been introduced to counteract the diffraction problem as it has become more acute with each new process node.

Phase-shift masks were introduced at the 180nm process node. They alter the phase of the light passing through some areas of the mask, changing the way it is diffracted and so reducing the defocusing effect of mask dimensions that are smaller than the wavelength of the illuminating light. The downside of phase-shift techniques is that the masks are more difficult and expensive to make.

Optical-proximity correction (OPC) techniques work out how to distort the patterns on a mask to counter diffraction effects, for example by adding small ‘ears’ to the corners of a square feature on the mask so that they remain sharply defined on the wafer. The technique introduces layout restrictions, has a computational cost in design, and means that it takes longer and costs more to make the corrected masks.

Benefits and risks of FinFET and FD-SOI compared to bulk transistors.

Benefits and risks of finFETs compared to bulk transistors.

| Strengths | Weaknesses |
|---|---|
| Significant reduction in power consumption (~50% over 32nm) | Very restrictive design options, especially for analog: transistor drive strength is quantized to multiples of a single fin width |
| Faster switching speed | Fin width variability and edge quality lead to variability in threshold voltage VT |
| Effective speed/power trade-off possible with multi-VT | Extra manufacturing complexity and expense (~+3% according to Intel) |
| Availability of strain engineering | |

| Opportunities | Threats |
|---|---|
| Low power makes 20nm technology deployable for mobile applications | The potentially superior electrical performance and simpler manufacturing of fully depleted SOI |
| Increase CPU speeds beyond 4GHz | |
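The first finFET weakness, quantized drive strength, follows from the fact that each fin conducts along its two sidewalls and its top, so the effective width per fin is roughly 2·H_fin + W_fin and a device can only be an integer number of fins wide. A minimal sketch; the 42 nm fin height and 8 nm fin width are illustrative assumptions, not values from this post.

```python
import math

def fin_effective_width(h_fin_nm, w_fin_nm):
    """Effective channel width of one fin: two sidewalls plus the top."""
    return 2 * h_fin_nm + w_fin_nm

def quantize_width(target_w_nm, h_fin_nm=42.0, w_fin_nm=8.0):
    """Smallest number of fins whose total width meets the target, and that width."""
    per_fin = fin_effective_width(h_fin_nm, w_fin_nm)
    n_fins = max(1, math.ceil(target_w_nm / per_fin))
    return n_fins, n_fins * per_fin

for target in (92.0, 93.0, 200.0):
    print(target, quantize_width(target))
```

A target only slightly above one fin's width already forces a second fin, which is the loss of fine sizing control that hurts analog designers.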

Benefits and risks of FD-SOI compared to bulk transistors.

| Strengths | Weaknesses |
|---|---|
| Significant reduction in power consumption | High cost of initial wafers (~+10% over regular wafers, according to Intel) |
| Faster switching speed | Limited number of wafer suppliers |
| Easier, standard manufacturing process | Variability in VT due to variations in the thickness of the silicon thin film |
| Availability of back-biasing to control VT | Multi-VT more complex to implement |
| No doping variability | Lack of strain engineering |
| Layout library compatible with existing bulk technologies | Thin channel limits drive strength |

| Opportunities | Threats |
|---|---|
| Simpler and more flexible alternative to finFETs if the wafer cost issue can be overcome | High wafer cost threatens economic viability for wider market adoption |
| Better controllability for analog applications | |

MMMC Timing Closure

How to Close Timing with Hundreds of Multi-Mode/Multi-Corner Views

In the last decade we have seen the process of timing signoff become increasingly complex. Initial timing analyses at larger process nodes such as 180nm and 130nm were concerned mostly with operation at worst-case and best-case conditions. The distance between adjacent routing tracks was large enough that coupling capacitances were marginal compared with ground and pin capacitance. Hence, engineers seldom looked at the potential issues associated with cross-coupling and noise effects; it was simply easier to add a small amount of margin than to analyze crosstalk.

Starting at 90nm, and even more prominently at 65nm, an increase in coupling capacitance due to narrower routing pitches and taller metal segment profiles made crosstalk a significant concern. In addition, temperature inversion effects added to the number of analyses required to sign off a chip: what started as an analysis of two operational corners grew to at least three corners because of the increased delay at lower temperatures. At 45nm, process variation in the metal layers added to the number of process corners that had to be considered when timing a design. Now, at 28nm, the various PVT variations at the gate and interconnect levels, together with the number of functional and test modes, have pushed the number of mode/corner combinations into the hundreds. For today's designs, multi-mode/multi-corner (MMMC) timing analysis is a must.

In this paper we'll delve into the details behind the explosion of timing views, the need to analyze them and, more importantly, the need to optimize timing under all the possible combinations. (A timing view is a combination of one mode and one corner for one timing check, such as setup or hold.) We'll also take a look at today's methodologies for addressing these issues and consider how things can be improved.
The Necessity of Increasing Timing Analysis Coverage

Typical timing analysis consists of examining the operation of the design across a range of process, voltage, and temperature conditions. In the past it was acceptable to assume that the operational space of a design could be bounded by analyzing the design at two points. The first point was chosen by taking the worst-case condition for all three operating condition parameters (process, voltage, temperature), and the second by taking the best-case conditions for the same three parameters.

Figure 1 - Operating Conditions Domain for Timing Signoff


As shown in Figure 1, we assumed that the worst slack for a design in our three-dimensional box was covered by just two points because delay was typically monotonic along the axis between the points. And since delay at earlier process nodes was dominated by cell delay, little thought was given to the process range of the interconnect layers; parasitic files were typically extracted at the nominal process.

When process nodes moved to lower geometries like 65nm and 45nm, supply voltages were reduced to 1.0 V or less, and temperature began to have a contrary effect on cell delay. Whereas at previous technology nodes delay increased with high temperature, temperature inversion now increased delay at lower temperatures under worst-case voltage and process conditions. The direct result for timing signoff was an increase in the number of corners for signoff and optimization: the number of operating corners quickly doubled from two to four, or even five for those who insisted on timing test modes at typical operating conditions.

As routing pitch decreased, coupling capacitance increased, and with it the crosstalk delay effect. Process variations in metallization now had a non-negligible impact on the timing of the design. In addition, metal line width became small enough that even a small amount of variation affected wire resistance. Because metallization is a separate process from base-layer processing, engineers could not assume that process variation tracked in the same direction for both base and metal layers. Therefore, at 45nm and to a larger extent at 28nm, multiple extraction corners became necessary for timing analysis and optimization. These extraction corners consisted of worst C, worst RC, best C, best RC, and typical.

Summarizing the situation at 28nm, we have the following corners:


- Temperature: Tmax/Tmin

- Process (base layer): Fast-Fast, Fast-Slow, Slow-Fast, Slow-Slow, Typical

- Process (metal): Cmax, Cmin, Typical, RCmax, RCmin

- Voltage: Vmin, Vmax
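The corner list above multiplies out quickly. A minimal sketch, assuming every combination is analyzed (real flows prune many of them) and assuming three hypothetical modes; the mode names are invented for illustration:

```python
from itertools import product

# Corner axes from the 28nm list above (process names abbreviated).
temperatures = ["Tmax", "Tmin"]
base_process = ["FF", "FS", "SF", "SS", "TT"]
metal_process = ["Cmax", "Cmin", "Typical", "RCmax", "RCmin"]
voltages = ["Vmin", "Vmax"]

corners = list(product(base_process, voltages, temperatures, metal_process))

# Hypothetical functional/test modes; real designs define their own.
modes = ["func", "scan_shift", "scan_capture"]
checks = ["setup", "hold"]

# A timing view = one mode + one corner + one timing check.
views = [(m, c, k) for m in modes for c in corners for k in checks]
print(len(corners), "corners,", len(views), "timing views")
```

Even before double patterning adds its own extraction corners, 100 corners times a handful of modes and two checks lands in the hundreds of views described in the text.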

At the 20nm process node things become even more complicated because of double-patterning technology (DPT). Because lithography limitations require two masks for the same layer, the masks must be precisely aligned so that the spacing between patterns is consistent across the die. Unfortunately, there will always be some shift of the masks relative to each other, and it may not be possible to predict that shift, which results in more variation. One way to handle this is to extract more corners to simulate the bounds of the shift. The end result is a possible doubling of the corners going from 28nm to 20nm. Even if you doubt the number of timing analyses required at 28nm, it isn't going to get any better at 20nm; it's only going to get worse.

Analysis is Only Half of the Story

So far we've discussed the reasons for the exploding number of corners required to verify a design for timing: lower rail voltages, variation in the processing of the metal layers, and variation due to the new lithography techniques required to print patterns at 20nm and below. Once we've found a way over the mountain of timing analyses, it would seem our problems are solved. Absolutely not; we've just hit the tip of the iceberg.

Processing the results and fixing timing violations is where the real work begins. The first challenge is presenting a consolidated view of the design to the timing optimizer. When analysis involves more than 100 different views, consolidating the results becomes critical in terms of data management and capacity.

Many customers today have their own home-brewed scripts to manage the results from different views. Figure 3 shows a typical flow where designers insert their own optimization utility to close the design. These utilities range from simple consolidation of native tool reports to complex, customized construction of timing and slack reports from exported timing attributes.
For scripts that simply merge reports, the resulting data can be more than an implementation-style tool can handle. Today, most tools that manage timing optimization operate on only a subset of the views needed for signoff because of capacity limitations. There are complex solutions that can parse timing attributes into more efficient representations of the design timing, but the maintenance overhead and the expertise required may be beyond the reach of the average engineer.
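The simplest kind of home-brewed consolidation keeps, for every endpoint, the worst slack seen across all views and the view that produced it. A minimal sketch, assuming a hypothetical report format (a dict mapping view name to {endpoint: slack in ns}); the view and pin names are invented, and setup and hold views should be merged separately because their slacks are not comparable:

```python
def merge_worst_slack(view_reports):
    """Return {endpoint: (worst_slack, view)} across all views of one check type."""
    worst = {}
    for view, endpoints in view_reports.items():
        for endpoint, slack in endpoints.items():
            if endpoint not in worst or slack < worst[endpoint][0]:
                worst[endpoint] = (slack, view)
    return worst

def violations(worst):
    """Endpoints with negative worst slack, most critical first."""
    return sorted((slack, ep, view) for ep, (slack, view) in worst.items() if slack < 0)

# Hypothetical setup reports from two views.
setup_reports = {
    "func_ss_cmax_tmin": {"u_core/reg_a/D": 0.05, "u_core/reg_b/D": -0.12},
    "func_ss_rcmax_tmax": {"u_core/reg_a/D": -0.02, "u_core/reg_b/D": 0.30},
}
worst = merge_worst_slack(setup_reports)
for slack, ep, view in violations(worst):
    print(f"{ep}: {slack:+.2f} ns ({view})")
```

Scaling this to hundreds of views and millions of endpoints is where the data-management and capacity problems described above appear.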

The second complication to optimization is an old problem that continues to frustrate engineers today: the miscorrelation between implementation timing and signoff timing. The usual way to mitigate this problem is to margin the design during the implementation phase so as not to incur violations during signoff timing. With power being one of the most critical design metrics today, adding excessive gates to ensure minimal violations at signoff is a poor tradeoff that leads to power-hungry designs, more area, and fewer chips per wafer. The iterations required to close timing differences between implementation and signoff are further complicated by the fact that signoff timers are not physically aware. Placement of inserted buffers is left to the implementation environment as a post-processing step for engineering change order (ECO) generation. Often the placement of new cells is dramatically different from what the optimization algorithms assumed, because of the placement of existing cells and the lack of vacant space in highly utilized designs. This results in a significant mismatch between the interconnect parasitics assumed during optimization and the actual placement and routing of the ECO.

Figure 4 shows the potential uncertainty in cell placement that affects assumptions made in a traditional signoff ECO flow. In this figure, both Case 1 and Case 2 add uncertainty to the final timing result. However, Case 1 is much worse because it affects the timing of paths that may already have met timing. Not only is the impact on timing unknown, but it is also impossible to tell which paths will be affected by the movement of cells to legalize placement. What was previously a timing-clean view (mode/corner combination) could have many violations after routing and placement legalization of the inserted cells.

Addressing the Gaps in Today's Signoff ECO Flows

We've examined some of the factors that have increased the complexity of timing signoff and design closure. An increasing number of analysis views and differences between implementation and signoff timers have created numerous obstacles to creating a design that is timing “clean.” The biggest issues are:

- An exploding number of analysis runs that need to be performed at 28nm and below

- Runtime of both parasitic extraction and timing analysis

- The ability of EDA tools to look across hundreds of views during timing optimization

- Timing miscorrelation between implementation and signoff

- The lack of physical awareness when performing timing optimization and ECO generation

Conclusion

Addressing the issues listed above is well within the reach of today's EDA technology. Integrating signoff and implementation tools has been discussed for many years, and there have been attempts to make it happen. The key to making it work is a system built with integration in mind. This includes common timing engines and unified databases, so that the exchange of data from implementation to extraction to timing is seamless and the results are consistent. Patching together long-standing point tools is generally a suboptimal solution that delivers neither efficiency nor runtime performance.

Fortunately for the user, there are timing closure solutions on the market today, such as the Encounter Timing System from Cadence, that address the items listed. Customers should expect their EDA vendors to provide solutions that address today's signoff timing closure deficiencies in the overall design flow. Otherwise, with the move to smaller process nodes, this phase of the design flow could become the longest step to taping out a design.

TEMPERATURE INVERSION


Traditionally, MOSFET drive current (ID) decreased with increasing temperature, so in most cases the worst-case delay corner was the high-temperature corner (100°C or higher, depending on the target application). With transistor scaling, VDD and VT have scaled, but not as aggressively as the other parameters (such as gate oxide thickness and channel length). Consider the MOSFET drive current equation (long-channel, saturation):

$$I_D = \frac{1}{2}\,\mu C_{ox}\,\frac{W}{L}\,(V_{GS}-V_T)^2$$

So ID varies linearly with μ (mobility) and with (VGS − VT)², the square of the overdrive voltage. Both mobility and VT decrease with increasing temperature, and vice versa. Because the current depends on the difference between VGS and VT, there is a contention between the mobility term and the (VGS − VT) term, and whichever has more impact on the final current determines whether drive current increases or decreases with increasing temperature. At scaled technology nodes, VDD has dropped to values like 0.9 V or lower, while VT has not scaled as aggressively (0.3 to 0.4 V). As a result, even though mobility improves at low temperature, the increase in VT, and hence the reduction in (VGS − VT), has a greater impact on the current, so drive current is lower at low temperature than at high temperature. Hence, at scaled technology nodes, the low-temperature corner becomes the SLOW corner instead of the high-temperature corner, especially for HVT devices. This phenomenon is known as inverse temperature dependence in MOSFETs.
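The contention between mobility and overdrive can be seen with a toy square-law model, ID ∝ μ(T)·(VDD − VT(T))². This is a sketch under loudly stated assumptions: mobility is taken to scale as T^−1.5, VT is assumed to fall by 1 mV/K with VT = 0.4 V at 27°C, and the two supply values are chosen only to contrast an older node with a scaled one. Real devices need a proper compact model.

```python
def drive_current(vdd, temp_c, vt0=0.4, dvt_dt=-1e-3, mu_exp=-1.5, t0_c=27.0):
    """Relative saturation current, ID ~ mu(T) * (VDD - VT(T))**2, arbitrary units.

    Assumptions (illustrative only):
      mu(T) = mu0 * (T / T0) ** mu_exp, with mu_exp = -1.5
      VT(T) = vt0 + dvt_dt * (T - T0), with dvt_dt = -1 mV/K
    """
    t_k = temp_c + 273.15
    t0_k = t0_c + 273.15
    mu_rel = (t_k / t0_k) ** mu_exp        # mobility falls as temperature rises
    vt = vt0 + dvt_dt * (temp_c - t0_c)    # VT falls as temperature rises
    overdrive = max(vdd - vt, 0.0)         # VGS = VDD for the switching device
    return mu_rel * overdrive ** 2

def slow_corner(vdd, t_cold=-40.0, t_hot=125.0):
    """Return which temperature corner gives the lower drive current (the slow one)."""
    return "cold" if drive_current(vdd, t_cold) < drive_current(vdd, t_hot) else "hot"

for vdd in (1.2, 0.7):
    print(f"VDD = {vdd} V: slow corner is {slow_corner(vdd)}")
```

Under these assumptions the mobility term wins at the higher supply (hot is slow), while at the lower supply the overdrive term wins and the cold corner becomes the slow one.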

Crosstalk

Switching of the signal on one net can interfere with a neighboring net through the coupling capacitance between them. This effect is known as crosstalk. Crosstalk can lead to crosstalk-induced delay changes or to static noise (glitches on a quiet victim net).

Common ways to reduce crosstalk:

- Double spacing => more spacing => less coupling capacitance => less crosstalk

- Multiple vias => less resistance => less RC delay

- Shielding => constant coupling capacitance => known value of crosstalk

- Buffer insertion => boosts the victim's drive strength

- Net ordering => change the order of the net routes within the same metal layer

- Layer assignment => move one of the two nets to a different metal layer if possible (e.g., one signal in metal 3 and the other in metal 4)
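Two first-order formulas make the list above quantitative: the worst-case glitch on a floating victim is ΔV = Vswing·Cc/(Cc + Cg), and the load seen by a switching victim is Cg + k·Cc with a Miller factor k between 0 (aggressor switching in the same direction) and 2 (opposite direction). A minimal sketch; the capacitance values are assumptions, and treating wider spacing as simply halving Cc is a rough illustration rather than an extraction result.

```python
def glitch_amplitude(v_swing, c_couple, c_ground):
    """Worst-case noise on a floating (undriven) victim: dV = Vswing * Cc / (Cc + Cg)."""
    return v_swing * c_couple / (c_couple + c_ground)

def effective_load(c_ground, c_couple, miller_factor):
    """Load seen by the victim driver: Cg + k * Cc.

    k = 0: aggressor switches in the same direction
    k = 1: aggressor is quiet
    k = 2: aggressor switches in the opposite direction
    """
    if not 0.0 <= miller_factor <= 2.0:
        raise ValueError("miller_factor must be between 0 and 2")
    return c_ground + miller_factor * c_couple

VDD = 1.0    # assumed aggressor swing, V
CG = 8e-15   # assumed victim capacitance to ground, F
CC = 2e-15   # assumed coupling capacitance at minimum spacing, F

print(glitch_amplitude(VDD, CC, CG))       # minimum spacing
print(glitch_amplitude(VDD, CC / 2, CG))   # if double spacing roughly halved Cc (assumption)
print(effective_load(CG, CC, 2.0) / effective_load(CG, CC, 0.0))  # delay-load spread
```

The ratio of best- to worst-case load is what turns into crosstalk-induced delay variation; shielding removes that spread by making k effectively constant.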

Difference between DIBL and GIDL.

GIDL: Gate-induced drain leakage is a leakage mechanism in the gate-drain overlap region that occurs when the drain voltage is high and the gate voltage is low. The strong field in the overlap region bends the energy bands in the drain so sharply that band-to-band tunneling occurs: electrons tunnel from the valence band into the conduction band, leaving holes behind. The electrons are collected at the drain and the holes flow into the substrate, producing a drain-to-substrate leakage current.

DIBL: Drain-induced barrier lowering is the reduction in the threshold voltage of the transistor caused by the large depletion region created by the drain potential. You can think of it this way: since part of the region under the gate is already depleted by the drain, only a small gate potential is needed to complete depletion in the rest of the channel, which means a lower threshold voltage.

What is the Difference between synchronizer and lockup latch?


A synchronizer handles situations where data needs to move between clock domains, for example from a faster clock domain to a slower one or vice versa, whereas a lockup latch is not a synchronizer and does not make functional clock-domain crossings safe.

A lockup latch adds half a clock cycle to the path between two flops to avoid setup/hold issues caused by clock skew between them.

Lock-up latches are used to allow scan chains to cross the clock domains. They mitigate the skew between two clock domains to ensure data is shifted reliably on the scan chain.
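The half-cycle effect on a scan hop can be sketched as a hold check. This is a simplified model under stated assumptions: the lockup latch is clocked by the inverted launch clock and sits at the end of the path, so new data cannot pass it until half a period after the launch edge; the latch's own D-to-Q delay is ignored, and all numbers (in ns) are illustrative.

```python
def hold_slack(clk_to_q, path_delay, skew, t_hold, period=None, lockup_latch=False):
    """Hold slack (ns) at the capture flop of one scan-chain hop.

    skew = capture clock arrival - launch clock arrival
    (positive skew means the capture clock is late, which hurts hold).
    """
    arrival = clk_to_q + path_delay
    if lockup_latch:
        if period is None:
            raise ValueError("period is required when a lockup latch is used")
        # Assumed: the latch opens half a period after the launch edge,
        # so the new value cannot reach the capture flop before then.
        arrival = max(arrival, period / 2)
    return arrival - (skew + t_hold)

# Hypothetical hop between two clock domains with 0.4 ns of skew, 10 ns shift clock.
print(hold_slack(0.1, 0.05, 0.4, 0.05))                                 # violates hold
print(hold_slack(0.1, 0.05, 0.4, 0.05, period=10.0, lockup_latch=True))  # half cycle gained
```

The latch effectively delays the launch by half a cycle, which absorbs skew that would otherwise require many hold buffers on the scan path.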

Latch-up in CMOS Integrated Circuits


Introduction

In CMOS fabrication, latch-up is a malfunction that can occur as a result of improper design. Latch-up in a CMOS integrated circuit causes unintended currents that can destroy the entire circuit; therefore, it must be prevented.

Explanation of the phenomena


Figure 1 shows the cross section of a two-transistor CMOS integrated circuit with the nMOS on the left-hand side and the pMOS on the right-hand side. As can be seen from the figure, a parasitic pnp transistor is formed by the source of the pMOS, the n-well, and the p-substrate. Furthermore, a parasitic npn transistor is formed by the source of the nMOS, the p-substrate, and the n-well. These parasitic transistors and the finite resistances of the n-well and p-substrate can be drawn as shown in Figure 2 [1]. The equivalent circuit of these parasitic bipolar transistors is given in Figure 3 [1].

Figure 1 Cross section of a CMOS IC

Figure 2 Parasitic bipolar transistors in a CMOS process

Figure 3 Equivalent circuits formed by the parasitic transistors

As the equivalent circuit shows, there is a positive feedback loop around Q1 and Q2. If a parasitic current flows into node X and raises VX, Q2 turns on and IC2 increases, which lowers VY. This increases IC1, and consequently VX rises even further. If the loop gain is equal to or greater than unity, this continues until an enormous current flows through the circuit; in other words, until the circuit is latched up [1].
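The role of the loop gain can be illustrated with a toy model in which each trip of a disturbance around the Q1/Q2 loop multiplies it by the loop gain. This is a deliberate simplification: the loop gain is taken as the product of the two bipolar current gains, the n-well and substrate shunt resistances (which divert base current and lower the effective gain) are ignored, and the β values are invented for illustration.

```python
def loop_gain(beta_npn, beta_pnp):
    """Simplified loop gain of the parasitic pair; shunt resistors ignored."""
    return beta_npn * beta_pnp

def circulating_current(i_trigger, beta_npn, beta_pnp, trips=10):
    """Toy model: each trip around the loop multiplies the disturbance by the loop gain."""
    i = i_trigger
    for _ in range(trips):
        i *= loop_gain(beta_npn, beta_pnp)
    return i

def latches_up(beta_npn, beta_pnp):
    """Latch-up is possible when the loop gain reaches unity."""
    return loop_gain(beta_npn, beta_pnp) >= 1.0

for b_npn, b_pnp in ((10.0, 0.2), (5.0, 0.1)):
    print(b_npn, b_pnp, latches_up(b_npn, b_pnp), circulating_current(1e-6, b_npn, b_pnp))
```

With a loop gain of 2 a 1 μA disturbance grows without bound, while with a loop gain of 0.5 it dies out, which is why the prevention measures aim to keep the gains and parasitic resistances low.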

Preventing Latch-up

As explained above, the loop gain of the equivalent circuit shown in Figure 3 must be less than unity to prevent latch-up. Consequently, both process and design engineers should take steps to prevent latch-up. Doping levels and other design aspects should be chosen so that the parasitic resistances and the current gains of the bipolar transistors stay low. There are specific design rules to prevent latch-up in different technologies [1].

Conclusion

Because its consequences can be fatal for the circuit, preventing latch-up is essential for proper operation of a CMOS integrated circuit.

References


[1] Razavi, B., 2000, Design of Analog CMOS Integrated Circuits, p. 628

Why Should the Scan Frequency Be Less than the Clock Frequency?

Why should the scan frequency be low?

Circuit activity increases during testing, which leads to high test power dissipation. The chain of effects is:

- High current causes a drop in the power supply voltage (IR drop)

- The lower voltage reduces the current flowing through the transistors

- The time taken to charge the load capacitors increases

This causes:

- Ground bounce

- Excessive heating => permanent damage to the circuit

- Good chip labeled bad => unnecessary yield loss

- Apparent stuck-at and delay faults

Clock Speed-Up under Power Constraints

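- Test clock frequency is lowered to reduce power dissipation

- The power budget requires $P_{budget} \ge \tfrac{1}{2} C V^2 \alpha_{peak} f_{test}$, so $f_{test} \le \dfrac{2 P_{budget}}{C V^2 \alpha_{peak}}$, where α is the switching activity

- If $\alpha = \alpha_{peak} / i$, then $f = i \cdot f_{test}$ without exceeding $P_{budget}$

- C and V are constant for a given circuit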
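The power-constrained test frequency is easy to evaluate: f_test ≤ 2·P_budget/(C·V²·α_peak), and cutting the activity to α_peak/i lets the clock speed up by a factor of i at the same power. A minimal sketch; the budget, capacitance, supply, and activity values are assumptions chosen only for illustration.

```python
def max_test_frequency(p_budget, c_switched, vdd, alpha):
    """Highest test clock that keeps 0.5 * C * V^2 * alpha * f within P_budget."""
    return 2.0 * p_budget / (c_switched * vdd ** 2 * alpha)

P_BUDGET = 0.5    # W, assumed test power budget
C_TOTAL = 10e-9   # F, assumed total switchable capacitance
VDD = 1.0         # V, assumed supply
ALPHA_PEAK = 0.5  # assumed peak switching activity during scan shift

f_test = max_test_frequency(P_BUDGET, C_TOTAL, VDD, ALPHA_PEAK)
i = 4  # activity reduced to alpha_peak / i (e.g. by low-power pattern filling)
f_fast = max_test_frequency(P_BUDGET, C_TOTAL, VDD, ALPHA_PEAK / i)
print(f"f_test = {f_test / 1e6:.0f} MHz, with alpha_peak/{i}: {f_fast / 1e6:.0f} MHz")
```

Because C and V are fixed for a given chip, activity is the only lever in this expression, which is why low-activity test patterns allow faster scan clocks.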

Thursday, 26 February 2015

Congestion control methods

Congestion needs to be analyzed after placement, and the routing results depend on how congested your design is. Routing congestion may be localized. Some of the things you can do to make sure routing is hassle-free are:

Placement blockages: The utilization constraint is not a hard rule, and if you want to specifically avoid placement in certain areas, use placement blockages.

Macro-padding: Macro padding, or placement halos around the macros, are placement blockages around the edges of the macros. This makes sure that no standard cells are placed near the pins of the macros, giving extra breathing space for the macro pin connections to standard cells.

Cell padding: Cell Padding refers to placement clearance applied to std cells in PnR tools. This is typically done to ease placement congestion or reserve some space for future use down the flow.

Maximum Utilization constraint (density screens): Some tools let you specify maximum core utilization numbers for specific regions. If any region has routing congestion, utilization there can be reduced, thus freeing up more area for routing.

For example, to cap utilization at 60% in the region with corners (837, 114) and (1103, 918):

set_congestion_options -max_util 0.6 -coordinate {837 114 1103 918}
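A density screen translates directly into a cell-area budget for its region. A minimal sketch, assuming the coordinates in the command above are in μm and form a rectangle {x1 y1 x2 y2}; the 150,000 μm² of placed cell area is a hypothetical number, and the functions are illustrative helpers, not part of any tool's API.

```python
def region_area(coords):
    """Area of a rectangle given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = coords
    return abs(x2 - x1) * abs(y2 - y1)

def allowed_cell_area(coords, max_util):
    """Standard-cell area that fits in the region at the given utilization cap."""
    if not 0.0 < max_util <= 1.0:
        raise ValueError("max_util must be in (0, 1]")
    return region_area(coords) * max_util

def excess_cell_area(coords, max_util, placed_cell_area):
    """Cell area that must move out of the region to respect the cap."""
    return max(0.0, placed_cell_area - allowed_cell_area(coords, max_util))

screen = (837, 114, 1103, 918)  # region from the command above, assumed in um
print(region_area(screen), allowed_cell_area(screen, 0.6))
print(excess_cell_area(screen, 0.6, 150_000.0))  # hypothetical placed cell area, um^2
```

The freed-up fraction of the region (40% here) is what gives the router extra tracks in the congested area.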
